If AI does all our work, what will be left for us to do? The achievements of AI providers are astonishing, and they keep getting better. Above all: What has already been developed but not yet released? What is currently under development? And what are AI engineers thinking about today?

No matter which direction we look, the effects are impossible to predict. Speaking for software development, it is already almost impossible to do without intelligent support.

And that’s just the tip of the iceberg, the beginning. When intelligent software is available for everything, humans will no longer be needed. Only the developers of AI. So for software developers, it’s clear – learn Python quickly and dive into LLMs.

But what about lawyers? Or tax advisors? Intelligent image recognition has long been used in medicine, with resounding success. Tumors that the radiologist did not detect were discovered.

But beware: doesn’t AI also hallucinate? Yes, of course. Sometimes it makes up completely fictitious products, and facts are invented as well. But that’s all just a transition. The designers use what is known as “reasoning” to have the AI check and explain its outputs. The idea is that anything that does not pass through this filter is not output at all.

So yes, AI hallucinates, but the hallucinations are becoming fewer and fewer and the AI is becoming smarter and smarter.

Creativity? Surely only humans can do that? That’s no longer true. Intelligent image programs can produce artistic works from any text input that are hard to distinguish from those created by humans.

Oh, that’s where authorship comes into play. How does AI know everything it knows? From texts and images created by real people. And they also have rights to the works they create – or don’t they? What rights does AI have to the texts it creates itself? The battle is still raging on this front – the outcome is still open.

Science offers us an example of such a system. Here, texts are referenced, quoted, and used by other people. It is not without reason that citation errors are frowned upon in scientific practice. Could this become a template for AI? Ensuring good scientific practice means that ALL references, quotations, and copies must also be listed precisely by the artificial intelligence. Even when it quotes itself? That is a vicious circle. However, the same thing often happens in science, bloat effects included. From a purely technical point of view, it is now possible to create a scientific paper using clever prompts without having to write a single word yourself.

Who is responsible?

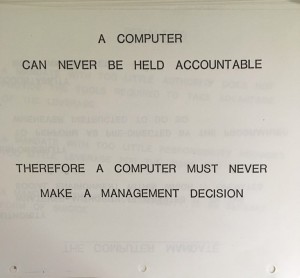

A post on the internet expresses what we all know: a computer cannot be held responsible for decisions. After all, it is “only” a computer, right?

Perhaps this will change in the future – but which computer could ever bear the financial burden that could result from a wrong decision? And then there is another problem: responsibility means that there is an explanation for the “why” behind a decision. A computer could explain its decision from a purely technical standpoint, but it cannot take responsibility for it. With humans, it is rather the opposite: they take responsibility for something, but often cannot explain how they arrived at a decision.

We will have to find a way out of this dilemma.

What remains for us?

During the installation of Windows 11, Microsoft, whose rights we explicitly recognise, introduces its AI integration, called Copilot, with the following sentence:

With Copilot, you get personal insights and can make informed decisions!

That says it all. All we are left with are insights. How and why these come about is another matter. But we get them, and that’s something.

And then we can make “informed decisions.” Decisions at work, in our private lives, for our investments, and for everything else. However, Microsoft leaves open what is meant by “informed.”

We have the answer: Informed means that options or alternatives are listed. It means that the criteria for a decision are known. And that these criteria can also be quantified and reviewed for each option. Is it all that simple?

Not quite. There is still our gut feeling. AI leaves us with a strange feeling. But what does that mean for our use of these systems? And what decisions do we make based on these feelings?

For us, it is clear: options and criteria can be found and listed with the help of AI. Evaluations are also possible to a limited extent. But when it comes to weighting the criteria, our personal assessments come into play.
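The split described above can be sketched as a small weighted decision matrix: options, criteria, and scores are the part that can be listed and quantified, while the weights are the personal part. This is only a minimal illustration under our own assumptions. The function name `rank_options`, the option and criterion names, and all numbers are hypothetical and are not taken from any product.

```python
def rank_options(scores, weights):
    """Return (option, weighted average score) pairs, best option first.

    scores  maps each option to its score per criterion (hypothetical 0-10 scale)
    weights maps each criterion to a personal importance value
    """
    total_weight = sum(weights.values())
    ranked = []
    for option, by_criterion in scores.items():
        # Weighted average over all criteria that carry a weight
        value = sum(weights[c] * by_criterion[c] for c in weights) / total_weight
        ranked.append((option, value))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical scores: the part that can be listed and quantified
scores = {
    "Tool A": {"cost": 8, "features": 5, "support": 6},
    "Tool B": {"cost": 4, "features": 9, "support": 7},
}

# Two people with different personal weightings of the same criteria
cost_focused = {"cost": 3, "features": 2, "support": 1}
feature_focused = {"cost": 1, "features": 3, "support": 1}

for name, weights in [("cost-focused", cost_focused),
                      ("feature-focused", feature_focused)]:
    best, value = rank_options(scores, weights)[0]
    print(f"{name}: {best} ({value:.2f})")
```

With identical scores, the two weightings lead to different preferred options. The scores can be prepared with outside help, but the ranking only becomes a decision once someone commits to the weights.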

And when it comes to qualitative criteria such as creativity, sympathy, or quality, AI fails completely. Because these criteria can only be evaluated by humans.

But we are prepared for that. With PhænoMind.
